Suppose that $X_1$ and $X_2$ are independent random variables such that $X_1+X_2$ is defined. Let $\sigma_1$ and $\sigma_2$, and $\sigma$ be the standard deviations of $X_1$, $X_2,$ and $X_1+X_2$ respectively. Then $\sigma^2=\sigma_1^2+\sigma_2^2$ (the numbers $\sigma_1^2,$ $\sigma_2^2$, and $\sigma^2$ are called the variances of $X_1,$ $X_2$, and $X_1+X_2$ respectively). This looks very Pythagorean Theorem (PT) like. In fact, at least one person calls it the Pythagorean Theorem of Statistics (PToS).

In the link, the author gives a proof of the PToS, but the proof doesn't look much like the proof for the PT. Nevertheless, the similarity is hard to ignore. So I wonder, is the PToS just the PT dressed up in statistical clothing, or is it merely a coincidence that the similarity exists?

I suspect it's the former, but I don't quite see the connection yet. The PT is about right triangles in a plane, and I don't see what that plane would be for the PToS, nor what the triangle is, nor why the third side of that triangle should be related to $X_1+X_2$. The other author doesn't seem to be aware of a connection either, since none of the reasons he gives for calling it the PToS are "because it is the Pythagorean Theorem."

Update:

My initial instinct was to represent $X_1$ and $X_2$ with a pair of orthogonal axes using $(\mu_1,\mu_2)$ as the origin, where $\mu_1$ and $\mu_2$ are the means of $X_1$ and $X_2$ respectively. If we let $\arrow x_1=(\sigma_1,0)$ and $\arrow x_2=(0,\sigma_2)$, then we could represent $X_1+X_2$ with the line through $(\mu_1,\mu_2)$ with the direction vector $\arrow x_1+\arrow x_2$. The length of $\arrow x_1+\arrow x_2$ is $\sigma=\sqrt{\sigma_1^2+\sigma_2^2}.$ Therefore, $\sigma^2={\sigma_1^2+\sigma_2^2}.$ So we get the Pythagorean identity.

This isn't a geometric proof of the Pythagorean Theorem of Statistics, though. At best, it is an illustration of the Pythagorean Theorem of Statistics by the Pythagorean Theorem from geometry. It is perhaps natural to represent $X_1$ and $X_2$ by orthogonal axes. Representing $X_1+X_2$ by the line in the direction of $\arrow x_1+\arrow x_2$ was forced to make the geometry work. It's not as natural. The more natural thing to do is represent $X_1+X_2$ by a third axis orthogonal to both $X_1$ and $X_2$. Also, I do not see how the statistical theorem would follow from the geometrical illustration.

Randy Elzinga's mathematics blog. Graph theory, algebra, and real life. Not peer reviewed.

Wednesday, 30 April 2014

Sunday, 9 March 2014

Competition and the Logistic Distribution

In my recent post on the other bell curves, I wondered if there was some familiar processes underlying the logistic distribution that would parallel coin-flipping for the normal distribution. I noted there that logistic distribution works better for the ELO rating system than the normal distribution did.

I also noted that the curve that describes the logistic distribution arises from population growth models that account for competition for resources. The ELO rating system is also used in the context of competition, but for games. I wondered whether there was something to this. Was it a mere linguistic coincidence, or was there a deeper connection?

Well, I still don't have a complete answer, but I can see at least one connection between the two scenarios. In both cases, there is competition for a finite resource. For the population growth model it is food supply, and for games, it is wins.

The parallel is not exact, however. In particular, the logistic model is used to describe the size of a population, but for a game, the logistic distribution is only used to compute the probable outcomes within a set of competitors, not the number of competitors. So the connection is not complete.

I also noted that the curve that describes the logistic distribution arises from population growth models that account for competition for resources. The ELO rating system is also used in the context of competition, but for games. I wondered whether there was something to this. Was it a mere linguistic coincidence, or was there a deeper connection?

Well, I still don't have a complete answer, but I can see at least one connection between the two scenarios. In both cases, there is competition for a finite resource. For the population growth model it is food supply, and for games, it is wins.

The parallel is not exact, however. In particular, the logistic model is used to describe the size of a population, but for a game, the logistic distribution is only used to compute the probable outcomes within a set of competitors, not the number of competitors. So the connection is not complete.

Labels:

logistic

,

Mathematics

,

probability

Thursday, 6 March 2014

Understanding Self-Information

I've never taken a course in information theory, but recently I've been trying to learn more about it on my own. I reached a satisfactory understanding of most of the concepts, but an acceptable understanding of self-information proved elusive for quite a while. If it were just another term of many, I might have been content to ignore it, but the definitions of all of the other terms rest on this concept, so I didn't consider ignoring it to be an option.

Most introductory texts on information theory that I've seen take the definition as axiomatic. If an event occurs with probability $p$, then self-information of that event is defined to be $-\log p$, where the logarithm is to some chosen base (often 2). What I wanted to understand better is why the self-information is defined that way. In my experience so far, this is rarely explained and never explained well.

It did make some intuitive sense, as various authors claim. One property of this definition is that the self-information of an event increases as the probability decreases. This is a reasonable property (I would even say necessary) for any self-information function. Suppose, for example, that we are playing a game of hangman. The letter "z" is far less frequent in English than the letter "s", so if a "z" is showing, then it is easier to narrow down the word than it would be if an "s" were showing. Thus, the rare event "contains the letter 'z'" gives more information than the common event "contains the letter 's'."

But $-\log p$ is not the only function that works like that. We could also use $1-p,$ which is far simpler and avoids the dreaded logarithms. Simple is always preferred to complicated, so there must be a reason why $-\log p$ is the function of choice.

Well, in order for information to be transmitted, there must be some way to represent it. Spoken languages use sounds. Written languages use words with letters some alphabet. Computers use strings of 0s and 1s, which we could think of as words from a two letter alphabet.

The number of possible strings (words) of length $k$ from an alphabet with $n$ symbols is $n^k$. Let's represent this number by $w$, so $w=n^k.$ We could also write this relationship between $w,$ $n$, and $k$ with the equation $k=\log_n w$. Although this equation is equivalent to $w=n^k$, we can interpret it differently as follows. If we want to assign unique labels to $w$ different objects using strings of the same length from an alphabet of size $n$ (that's a mouthful!), then $k$ is the smallest possible length of the strings.

Now, if these $w$ strings are chosen randomly with equal probability, say $p$, then $p=1/w$. Therefore, $w=1/p,$ and so $k=\log_n \frac{1}{p}=-\log_n p$. Aside from the base appearing explicitly in this expression for $k$, it is identical to the self-information. So the self-information of an event with a given probability $p$ can be interpreted as the length of a string that is needed to represent that event if it is one of many events that all occur with equal probability. Of course, it is possible that some event other than $w$ would have a different probability, but that doesn't change the amount of information provided by by the occurrence of $w$.

I don't know if this (rather long-winded) explanation makes the definition any more sensible for you, the reader. But once I arrived at this realization, I finally felt like I had understood why self-information is defined that way it is.

-----------------

Another difficulty that I had with the definition is the probabilistic model through which is usually explained. I can see why using this model is sensible from the perspective of the working information theorist. But as someone trying to learn about it, I couldn't see how a randomly transmitted symbol could provide any information at all. If I flip a coin and it shows up heads, so what, even if it's an unfair coin and heads is rare? And information is not usually transmitted randomly anyway.

Most introductory texts on information theory that I've seen take the definition as axiomatic. If an event occurs with probability $p$, then self-information of that event is defined to be $-\log p$, where the logarithm is to some chosen base (often 2). What I wanted to understand better is why the self-information is defined that way. In my experience so far, this is rarely explained and never explained well.

It did make some intuitive sense, as various authors claim. One property of this definition is that the self-information of an event increases as the probability decreases. This is a reasonable property (I would even say necessary) for any self-information function. Suppose, for example, that we are playing a game of hangman. The letter "z" is far less frequent in English than the letter "s", so if a "z" is showing, then it is easier to narrow down the word than it would be if an "s" were showing. Thus, the rare event "contains the letter 'z'" gives more information than the common event "contains the letter 's'."

But $-\log p$ is not the only function that works like that. We could also use $1-p,$ which is far simpler and avoids the dreaded logarithms. Simple is always preferred to complicated, so there must be a reason why $-\log p$ is the function of choice.

Well, in order for information to be transmitted, there must be some way to represent it. Spoken languages use sounds. Written languages use words with letters some alphabet. Computers use strings of 0s and 1s, which we could think of as words from a two letter alphabet.

The number of possible strings (words) of length $k$ from an alphabet with $n$ symbols is $n^k$. Let's represent this number by $w$, so $w=n^k.$ We could also write this relationship between $w,$ $n$, and $k$ with the equation $k=\log_n w$. Although this equation is equivalent to $w=n^k$, we can interpret it differently as follows. If we want to assign unique labels to $w$ different objects using strings of the same length from an alphabet of size $n$ (that's a mouthful!), then $k$ is the smallest possible length of the strings.

Now, if these $w$ strings are chosen randomly with equal probability, say $p$, then $p=1/w$. Therefore, $w=1/p,$ and so $k=\log_n \frac{1}{p}=-\log_n p$. Aside from the base appearing explicitly in this expression for $k$, it is identical to the self-information. So the self-information of an event with a given probability $p$ can be interpreted as the length of a string that is needed to represent that event if it is one of many events that all occur with equal probability. Of course, it is possible that some event other than $w$ would have a different probability, but that doesn't change the amount of information provided by by the occurrence of $w$.

I don't know if this (rather long-winded) explanation makes the definition any more sensible for you, the reader. But once I arrived at this realization, I finally felt like I had understood why self-information is defined that way it is.

-----------------

Another difficulty that I had with the definition is the probabilistic model through which is usually explained. I can see why using this model is sensible from the perspective of the working information theorist. But as someone trying to learn about it, I couldn't see how a randomly transmitted symbol could provide any information at all. If I flip a coin and it shows up heads, so what, even if it's an unfair coin and heads is rare? And information is not usually transmitted randomly anyway.

Labels:

Information theory

,

self-information

Tuesday, 25 February 2014

The other bell curves

After lines, conic sections (circles, parabolas, etc.), and graphs of trigonometric functions, the most familiar curve has to be the bell curve. Perhaps it is even more famous than some or all of those other curves, especially amongst students who just finished an exam that they don't feel good about.

Explaining exactly why we use the bell curve takes some work, but a rough explanation can be given in terms of coin flips. If you flip a coin say 20 times, then you can expect to get 20 heads with a certain probability, 19 heads and 1 tail with a certain probability, 18 heads and 2 tails with a certain probability, and so on. This is an example of a binomial distribution. If you plot these percentages, you get a shape that looks roughly like the bell curve. For 30 coin flips, the shape looks even more like the bell curve.

In fact, you can make the shape looks as close to the bell curve as you want by considering a large enough number of coin flips. The coin doesn't even need to be fair. That is, it could favour one of heads or tails more than the other, and with enough coin flips, you could still make the shape look as much like the bell curve as you like.

So lurking behind many things that give a shape that looks like the bell curve, there is a process something like a coin flip going on. This is an oversimplification, of course. There are other ways to reach the bell curve besides coin flips. The whole story would take a while to explain, however. The point is that we can arrive at the bell curve through a simple, familiar process.

The most famous bell curve is not the only bell curve, though. The one I'm talking about above is known as the Normal Curve or Gaussian Curve [1], but there are other curves besides these that have a bell shape, but aren't Normal Curves.

One curve comes from the logistic distribution. Another curve comes from the Cauchy distribution. What I wonder about these curves is this. Is there is some way to arrive at either of these distributions from some simple process in the same way that we can arrive at the normal distribution through the process of coin flips?

One place where the logistic distribution crops up is in the ELO rating system. This system was developed by a physicist named Arpad Elo (for some reason, his name gets capitalized, as if it's an acronym, when it comes to the rating system) to rate chess players, though it can be used for any type of competition, from individual games like chess to team sports, like soccer. Each competitor has a rating, and the probability that one competitor will beat another can be calculated from their ratings. The calculation depends a probability distribution. In the original scheme, a normal distribution was used, but it was discovered that a logistic distribution gave more accurate predictions.

In fact, it was this application of the logistic distribution that led me to my question above. The empirical evidence suggests that it's the better choice, but I want to know if there is a reason why. Is there some sort of logistic equivalent to coin flipping for the normal distribution that explains why predictions are better with the logistic distribution?

Logistic curves also pop up in some population growth models. There are continuous and discrete versions of these models, which in a sense parallel the normal curve (continuous) and the coin flips (discrete). Perhaps the answer to my question lies here, but I can't see the connection between population growth and games. (Both do involve competition, I suppose, but it's not clear to me whether or not this is a meaningful connection, or just a linguistic coincidence.)

The Wikipedia article for the Cauchy distribution says that "it has the distribution of a random variable that is the ratio of two independent standard normal random variables." That suggests that ratios of two independent binomial random variables (coin flips, for example) could be an avenue to explore.

It's also not hard to create other bell curves. Take any function $f(x)$ that is positive, symmetric about some vertical axis, and concave up. Then $1/f(x)$ will give a bell curve. I expect that most of these aren't interesting, though. Are there any others, besides the 3 mentioned above, that are?

[1] It's actually a class of curves that are related to each other by stretching and shifting vertically and horizontally.

Explaining exactly why we use the bell curve takes some work, but a rough explanation can be given in terms of coin flips. If you flip a coin say 20 times, then you can expect to get 20 heads with a certain probability, 19 heads and 1 tail with a certain probability, 18 heads and 2 tails with a certain probability, and so on. This is an example of a binomial distribution. If you plot these percentages, you get a shape that looks roughly like the bell curve. For 30 coin flips, the shape looks even more like the bell curve.

In fact, you can make the shape looks as close to the bell curve as you want by considering a large enough number of coin flips. The coin doesn't even need to be fair. That is, it could favour one of heads or tails more than the other, and with enough coin flips, you could still make the shape look as much like the bell curve as you like.

So lurking behind many things that give a shape that looks like the bell curve, there is a process something like a coin flip going on. This is an oversimplification, of course. There are other ways to reach the bell curve besides coin flips. The whole story would take a while to explain, however. The point is that we can arrive at the bell curve through a simple, familiar process.

The most famous bell curve is not the only bell curve, though. The one I'm talking about above is known as the Normal Curve or Gaussian Curve [1], but there are other curves besides these that have a bell shape, but aren't Normal Curves.

One curve comes from the logistic distribution. Another curve comes from the Cauchy distribution. What I wonder about these curves is this. Is there is some way to arrive at either of these distributions from some simple process in the same way that we can arrive at the normal distribution through the process of coin flips?

One place where the logistic distribution crops up is in the ELO rating system. This system was developed by a physicist named Arpad Elo (for some reason, his name gets capitalized, as if it's an acronym, when it comes to the rating system) to rate chess players, though it can be used for any type of competition, from individual games like chess to team sports, like soccer. Each competitor has a rating, and the probability that one competitor will beat another can be calculated from their ratings. The calculation depends a probability distribution. In the original scheme, a normal distribution was used, but it was discovered that a logistic distribution gave more accurate predictions.

In fact, it was this application of the logistic distribution that led me to my question above. The empirical evidence suggests that it's the better choice, but I want to know if there is a reason why. Is there some sort of logistic equivalent to coin flipping for the normal distribution that explains why predictions are better with the logistic distribution?

Logistic curves also pop up in some population growth models. There are continuous and discrete versions of these models, which in a sense parallel the normal curve (continuous) and the coin flips (discrete). Perhaps the answer to my question lies here, but I can't see the connection between population growth and games. (Both do involve competition, I suppose, but it's not clear to me whether or not this is a meaningful connection, or just a linguistic coincidence.)

The Wikipedia article for the Cauchy distribution says that "it has the distribution of a random variable that is the ratio of two independent standard normal random variables." That suggests that ratios of two independent binomial random variables (coin flips, for example) could be an avenue to explore.

It's also not hard to create other bell curves. Take any function $f(x)$ that is positive, symmetric about some vertical axis, and concave up. Then $1/f(x)$ will give a bell curve. I expect that most of these aren't interesting, though. Are there any others, besides the 3 mentioned above, that are?

[1] It's actually a class of curves that are related to each other by stretching and shifting vertically and horizontally.

Labels:

cauchy

,

logistic

,

probability

Sunday, 19 January 2014

Paul Revere and Graph Theory

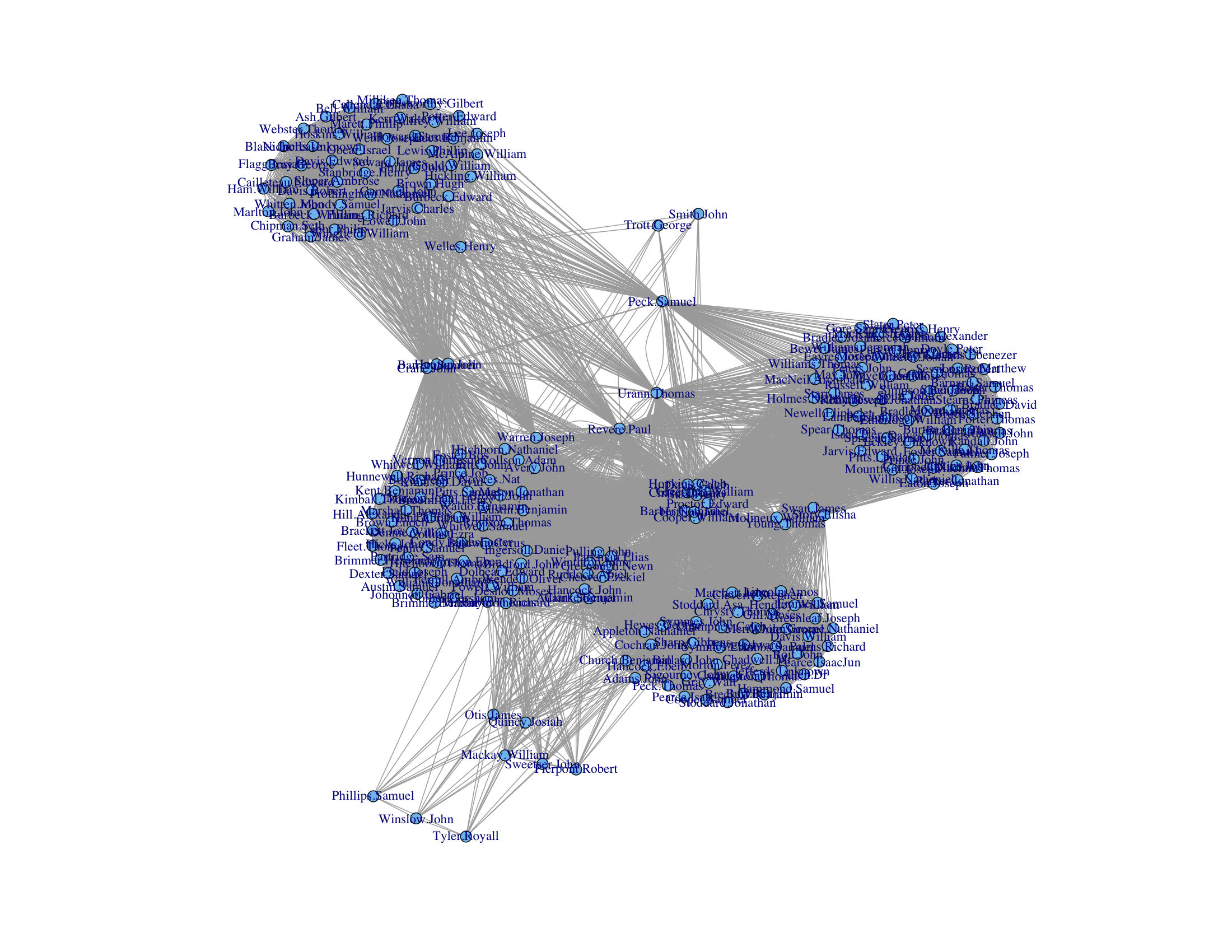

Constructing a graph based on historical data, Kieran Healy explains how we can use the graph to "find" Paul Revere.

I have been asked by my superiors to give a brief demonstration of the surprising effectiveness of even the simplest techniques of the new-fangled Social Networke Analysis in the pursuit of those who would seek to undermine the liberty enjoyed by His Majesty’s subjects. This is in connection with the discussion of the role of “metadata” in certain recent events and the assurances of various respectable parties that the government was merely “sifting through this so-called metadata” and that the “information acquired does not include the content of any communications”. I will show how we can use this “metadata” to find key persons involved in terrorist groups operating within the Colonies at the present time. I shall also endeavour to show how these methods work in what might be called a relational manner.

Saturday, 4 January 2014

When to set office hours

This past term, I was assisting as a tutor for one section of a math

course (I am teaching this course in the present term). The students in

the class wanted to have some extra tutorial time. One of the students

collected information about when everyone was available. It turned out

that there was no common period where all interested students could

come, so there ended up being no extra tutorial.

This was between the professor and the students. I didn't find out about it until after the fact. I assume from the conversation, though, that there was going to be at most one extra tutorial, because the proposal was nixed once it was revealed that there was no common period.

But suppose that the professor was willing to offer a number of tutorials limited only by what periods where either he or I would have been available to lead one (assuming that the tutorials would be held during the day). Then it may still be possible to find a way to accommodate everyone. In fact, it's pretty easy to tell whether or not it is.

We already have a list of which periods students are available. Since at least one of the available tutors (in this case, me and the professor) must be at the tutorial, we can strike from that list all periods where none are available. If everyone is available in at least one of the remaining periods, then all students can be accommodated. Otherwise it's not.

One way to do this is simply hold tutorials in each of those remaining periods. However, professors have many responsibilities besides teaching ("publish or perish" and all that), so it's desirable to use the fewest tutorial periods possible. So how can we do that?

It turns out that this is an application of a well studied problem in combinatorics, known as the set cover problem. Suppose we are given a collection of sets, say $S_1,S_2,\ldots, S_k$. Let $U=\cup_{i=1}^k S_i.$ Suppose that $I$ is a subset of $\{1,2,\ldots,k\}$ and $U=\cup_{i\in I}S_i$. Then $I$ is called a {\em set cover} of $U$. The set cover problem is to minimize the size of $I$.

Now, suppose that the periods are numbered $1,2,\ldots, k$. For each $i$, let $S_i$ be the set of students who are available during period $i$. Then the we can find the fewest tutorial periods needed by finding the smallest set cover of $U$.

Unfortunately, the set cover problem is NP-complete. The formal definition of what this means takes a fair bit of work to explain, so I won't get into it here (if you're interested, follow the link to the topic in the Wikipedia article on the set cover problem). In practical terms, though, it means that there is no currently known way to find the smallest set cover efficiently (and there probably won't ever be). Perhaps the number of students and number of periods is small enough that it is still manageable by computer, or there is some approximation algorithm that will give a near-optimal solution.

This was between the professor and the students. I didn't find out about it until after the fact. I assume from the conversation, though, that there was going to be at most one extra tutorial, because the proposal was nixed once it was revealed that there was no common period.

But suppose that the professor was willing to offer a number of tutorials limited only by what periods where either he or I would have been available to lead one (assuming that the tutorials would be held during the day). Then it may still be possible to find a way to accommodate everyone. In fact, it's pretty easy to tell whether or not it is.

We already have a list of which periods students are available. Since at least one of the available tutors (in this case, me and the professor) must be at the tutorial, we can strike from that list all periods where none are available. If everyone is available in at least one of the remaining periods, then all students can be accommodated. Otherwise it's not.

One way to do this is simply hold tutorials in each of those remaining periods. However, professors have many responsibilities besides teaching ("publish or perish" and all that), so it's desirable to use the fewest tutorial periods possible. So how can we do that?

It turns out that this is an application of a well studied problem in combinatorics, known as the set cover problem. Suppose we are given a collection of sets, say $S_1,S_2,\ldots, S_k$. Let $U=\cup_{i=1}^k S_i.$ Suppose that $I$ is a subset of $\{1,2,\ldots,k\}$ and $U=\cup_{i\in I}S_i$. Then $I$ is called a {\em set cover} of $U$. The set cover problem is to minimize the size of $I$.

Now, suppose that the periods are numbered $1,2,\ldots, k$. For each $i$, let $S_i$ be the set of students who are available during period $i$. Then the we can find the fewest tutorial periods needed by finding the smallest set cover of $U$.

Unfortunately, the set cover problem is NP-complete. The formal definition of what this means takes a fair bit of work to explain, so I won't get into it here (if you're interested, follow the link to the topic in the Wikipedia article on the set cover problem). In practical terms, though, it means that there is no currently known way to find the smallest set cover efficiently (and there probably won't ever be). Perhaps the number of students and number of periods is small enough that it is still manageable by computer, or there is some approximation algorithm that will give a near-optimal solution.

Labels:

combinatorics

,

Mathematics

,

set cover problem

Monday, 30 December 2013

Google "WordRank"

One of the more interesting things I learned about while I was a graduate student was Google's PageRank algorithm (to this day, it remains one of my go-to applications when I want to illustrate the real-life utility of certain concepts in linear algebra classes, although as each year passes, the likelihood that a student can remember life before Google gets smaller). What made it particularly interesting to me is that it could be stated largely in the terminology of algebraic graph theory, my own field of research. I had seen similar ideas before, but I had never seen anything like it put into practice (well, I had seen Google, but I wasn't aware that it was putting these ideas into practice).

The basic idea behind Google's algorithm is that good websites are websites that are linked to by good websites. If you think that sounds awkwardly circular, you wouldn't be wrong. Nevertheless, there's an elegantly simple way to express the idea as a matrix equation, and solutions to this equations are well studied. Things don't always work out as well in practice as they do in the abstract, but Google's search engine was better than any search engines that came before it. In this case, the abstract and practice lined up quite well. In fact, the only one of these older search engines that's still around to this day in any noticeable way is Yahoo, but it has a much smaller share of the search engine market than Google does.

Now, one of my side interests is languages (or linguistics maybe, but I'm not sure how much my forays take me into actual linguistics. Favourite websites/blogs by people who know what they're talking about include Dialect Blog and Language Log). While pursuing this interest, one of the things I've come across is lists of the most common words in a given language. I wonder if something like Google's PageRank algorithm could be used to give an alternate word ranking words. Instead of using links, you could say, in the same awkwardly circular fashion, that important words are words that are used with important words, set it up algebraically, then find the solution. The result would assign a "WordRank" to each word.

What "used with" means is not well defined, mind you. We could say that a word $A$ is used with a word $B$ if $A$ and $B$ are used in the same sentence, or perhaps used within a certain number of words of each other, regardless of whether or not they reside in the same sentence. Maybe there is some other suitable choice.

While I could easily solve the algebra part, setting up the equation would take quite some work, and it might be beyond my abilities. For example, if I chose to say words are used together when they are in the same sentence, I would need to decide whether or not a period in a given text is meant to indicate an abbreviation or the end of a sentence. It's easy enough to do if I'm reading, but I would need to program a computer to do this for the a sufficiently large quantity of text. That strikes me as a nontrivial task.

That wouldn't be a problem if I chose to say that words are used together when they appear within a certain number of words of each other. Suppose this number is $n$. The question arises as to what $n$ should be. The answer is not obvious to me.

Another complication is that spelling alone is not enough to determine what word is being used. For one thing, words can have different forms. Nouns can be plural or singular, verbs can be present tense or past tense, etc, and spellings change as a result. Also, different words can have the same spelling. There might be other issues (how to compensate for typos, maybe). It would take some effort to sort these out, and I wouldn't know where to start. These complications would crop up in producing lists based purely on frequency, though, and these lists already exist. So I would assume somebody already knows how to deal with that.

Once these obstacles are overcome, we could make a matrix $M$. The rows and columns of $M$ are both indexed by words (so the matrix is square). If $v$ and $w$ are words, then $(v,w)$-entry of $M$ is the number of times $v$ appears with $w$ in some corpus. Since $v$ is used with $w$ if and only if $w$ is used with $v$, $M$ is also symmetric. Following the example of the PageRank algorithm, we find an eigenvector ${\bf x}$ of $M$ corresponding to the largest eigenvalue of $M$. Provided that $M$ satisfies certain conditions, ${\bf x}$ will be unique up to a scalar multiple. Furthermore, we can assume that the entries of ${\bf x}$ are all non-negative. (These are consequences of the Perron-Frobenius Theorem). The vector ${\bf x}$ is called the principal eigenvector of $M$. The entries of ${\bf x}$ can be ranked according to their size, with one entry ranked higher than a second if the first entry is greater than the second. The WordRank of word $w$ is then the rank of entry $w$ of ${\bf x}$.

The total number of occurrences of the word $w$ is the row (or column) sum of row $w$ of $M$, which is given by $M{\bf j}$ where ${\bf j}$ is the all-ones vector. Suppose that $D$ is the diagonal matrix where diagonal entry $w$ is the number of occurrences of word $w$. Then column sums of $MD$ are all 1. Since $M$ and $D$ are both nonnegative, $MD$ is as well. Thus, $MD$ is a stochastic matrix, and therefore, the principal eigenvalue is 1. As an alternative to the scheme derived in the previous paragraph, we could use the principal eigenvector of $MD$ instead of $M$ to determine a ranking. The PageRank algorithm is based on the website analogue of this matrix.

In devising the PageRank algorithm, the Google founders found it necessary to modify this matrix further. Perhaps some other modifications to $M$ or $MD$ would be necessary here.

One reason I think this could be interesting is that it's not just based on how common a word is, but how much each word "works" with other words. The top $m$ words using WordRank could provide a more coherent list of words. Of course, a word will get used more if it works well with other words, so any algorithm worthy of the name WordRank could end up producing more or less the same thing as ranking by frequency.

This idea could also be nonsense. However, in the course of learning more about the PageRank algorithm, I came across another paper which generalized the algorithm. In the paper, they applied a variation of PageRank to the problem synonym extraction, with at least modest success. So using these ideas in the realm of languages does not seem particularly absurd.

In any case, I think it would be interesting to see the results.

Possibly there are other things that could be done with the matrices $M$, $MD$, or maybe some other related matrix. When designing language learning resources, it's important to come up with vocabulary lists. We could use frequency or WordRank, or some combination, to compose a these lists. But these lists probably need to be divided into smaller chunks for individual lessons. One would hope that these chunks consist of a coherent set of words, for example, words centred on a theme, like food related words, occupation related words, and so forth.

One could do this by hand, of course, and maybe that's the best way once you decide which words to use. But there are techniques based on eigenvalues and eigenvectors (for example, using the Fiedler vector) that are used to subdivide networks into smaller coherent components. Perhaps some variation of these techniques could be employed here.

The basic idea behind Google's algorithm is that good websites are websites that are linked to by good websites. If you think that sounds awkwardly circular, you wouldn't be wrong. Nevertheless, there's an elegantly simple way to express the idea as a matrix equation, and solutions to this equations are well studied. Things don't always work out as well in practice as they do in the abstract, but Google's search engine was better than any search engines that came before it. In this case, the abstract and practice lined up quite well. In fact, the only one of these older search engines that's still around to this day in any noticeable way is Yahoo, but it has a much smaller share of the search engine market than Google does.

Now, one of my side interests is languages (or linguistics maybe, but I'm not sure how much my forays take me into actual linguistics. Favourite websites/blogs by people who know what they're talking about include Dialect Blog and Language Log). While pursuing this interest, one of the things I've come across is lists of the most common words in a given language. I wonder if something like Google's PageRank algorithm could be used to give an alternate word ranking words. Instead of using links, you could say, in the same awkwardly circular fashion, that important words are words that are used with important words, set it up algebraically, then find the solution. The result would assign a "WordRank" to each word.

What "used with" means is not well defined, mind you. We could say that a word $A$ is used with a word $B$ if $A$ and $B$ are used in the same sentence, or perhaps used within a certain number of words of each other, regardless of whether or not they reside in the same sentence. Maybe there is some other suitable choice.

While I could easily solve the algebra part, setting up the equation would take quite some work, and it might be beyond my abilities. For example, if I chose to say words are used together when they are in the same sentence, I would need to decide whether or not a period in a given text is meant to indicate an abbreviation or the end of a sentence. It's easy enough to do if I'm reading, but I would need to program a computer to do this for the a sufficiently large quantity of text. That strikes me as a nontrivial task.

That wouldn't be a problem if I chose to say that words are used together when they appear within a certain number of words of each other. Suppose this number is $n$. The question arises as to what $n$ should be. The answer is not obvious to me.

Another complication is that spelling alone is not enough to determine what word is being used. For one thing, words can have different forms. Nouns can be plural or singular, verbs can be present tense or past tense, etc, and spellings change as a result. Also, different words can have the same spelling. There might be other issues (how to compensate for typos, maybe). It would take some effort to sort these out, and I wouldn't know where to start. These complications would crop up in producing lists based purely on frequency, though, and these lists already exist. So I would assume somebody already knows how to deal with that.

Once these obstacles are overcome, we could make a matrix $M$. The rows and columns of $M$ are both indexed by words (so the matrix is square). If $v$ and $w$ are words, then $(v,w)$-entry of $M$ is the number of times $v$ appears with $w$ in some corpus. Since $v$ is used with $w$ if and only if $w$ is used with $v$, $M$ is also symmetric. Following the example of the PageRank algorithm, we find an eigenvector ${\bf x}$ of $M$ corresponding to the largest eigenvalue of $M$. Provided that $M$ satisfies certain conditions, ${\bf x}$ will be unique up to a scalar multiple. Furthermore, we can assume that the entries of ${\bf x}$ are all non-negative. (These are consequences of the Perron-Frobenius Theorem). The vector ${\bf x}$ is called the principal eigenvector of $M$. The entries of ${\bf x}$ can be ranked according to their size, with one entry ranked higher than a second if the first entry is greater than the second. The WordRank of word $w$ is then the rank of entry $w$ of ${\bf x}$.

The total number of occurrences of the word $w$ is the row (or column) sum of row $w$ of $M$, which is given by $M{\bf j}$ where ${\bf j}$ is the all-ones vector. Suppose that $D$ is the diagonal matrix where diagonal entry $w$ is the number of occurrences of word $w$. Then column sums of $MD$ are all 1. Since $M$ and $D$ are both nonnegative, $MD$ is as well. Thus, $MD$ is a stochastic matrix, and therefore, the principal eigenvalue is 1. As an alternative to the scheme derived in the previous paragraph, we could use the principal eigenvector of $MD$ instead of $M$ to determine a ranking. The PageRank algorithm is based on the website analogue of this matrix.

In devising the PageRank algorithm, the Google founders found it necessary to modify this matrix further. Perhaps some other modifications to $M$ or $MD$ would be necessary here.

One reason I think this could be interesting is that it's not just based on how common a word is, but how much each word "works" with other words. The top $m$ words using WordRank could provide a more coherent list of words. Of course, a word will get used more if it works well with other words, so any algorithm worthy of the name WordRank could end up producing more or less the same thing as ranking by frequency.

This idea could also be nonsense. However, in the course of learning more about the PageRank algorithm, I came across another paper which generalized the algorithm. In the paper, they applied a variation of PageRank to the problem synonym extraction, with at least modest success. So using these ideas in the realm of languages does not seem particularly absurd.

In any case, I think it would be interesting to see the results.

Possibly there are other things that could be done with the matrices $M$, $MD$, or maybe some other related matrix. When designing language learning resources, it's important to come up with vocabulary lists. We could use frequency or WordRank, or some combination, to compose a these lists. But these lists probably need to be divided into smaller chunks for individual lessons. One would hope that these chunks consist of a coherent set of words, for example, words centred on a theme, like food related words, occupation related words, and so forth.

One could do this by hand, of course, and maybe that's the best way once you decide which words to use. But there are techniques based on eigenvalues and eigenvectors (for example, using the Fiedler vector) that are used to subdivide networks into smaller coherent components. Perhaps some variation of these techniques could be employed here.

Labels:

algebra

,

eigenvalue

,

eigenvector

,

languages

,

PageRank

Subscribe to:

Posts

(

Atom

)